Mobile delivery robots need to maneuver through unstructured environments and navigate around wavering bikes, parked vehicles, or other roadside hazards. This requires leveraging AI models that can absorb large amounts of information. Transformers have made it possible to do this in the context of Large Language Models (LLMs). We have found that the same is true for building capable neural robot navigation models. Analogously to how LLMs operate on word tokens, our neural navigation model operates on image patches. The model is trained to solve multiple pretext tasks, including learning a path planning policy in simulation. The resulting end-to-end trained mobility agent is not only capable of navigating in multiple challenging scenarios in simulation but also transfers surprisingly well to the real world and is capable of running in real time onboard our robot, powered by NVIDIA Accelerated Computing.

Interpretability Through Attention

A key challenge with end-to-end models is interpreting their behavior and understanding why a model took a particular action. We have found that for a neural navigation model built using transformer blocks, the transformer’s attention mechanism provides a window into the model’s “thinking” and can be used to interpret the actions taken by it. For instance, we trained a neural navigation model consisting of two parts:

- A vision transformer, which learns how to see; and

- A policy transformer, which learns how to move.

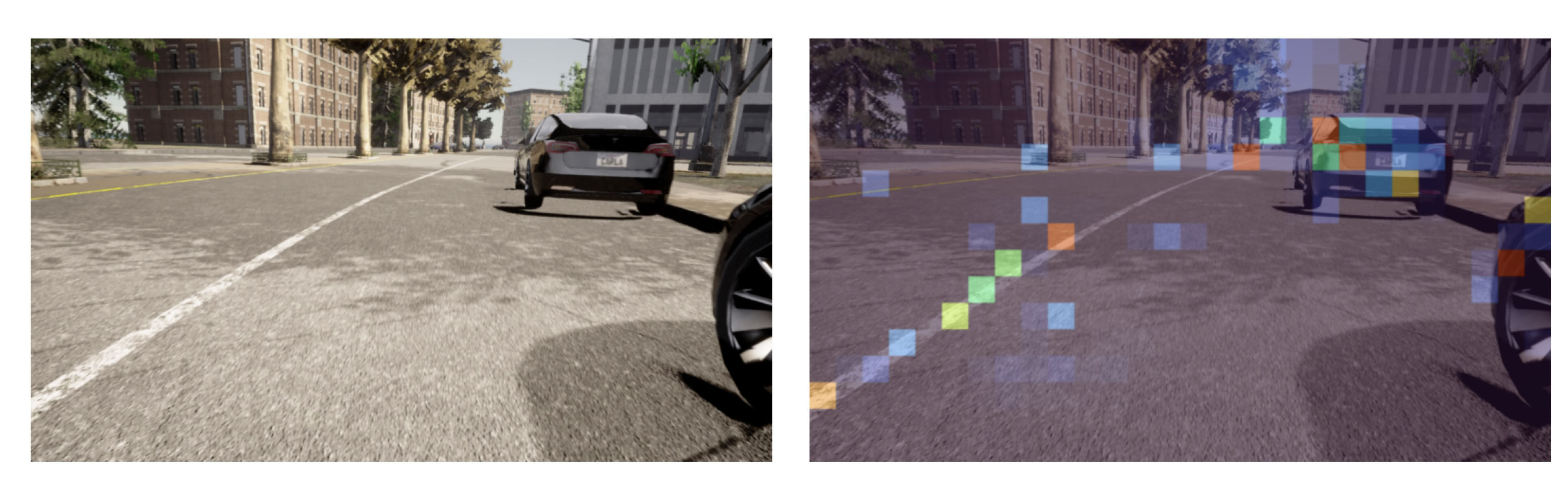

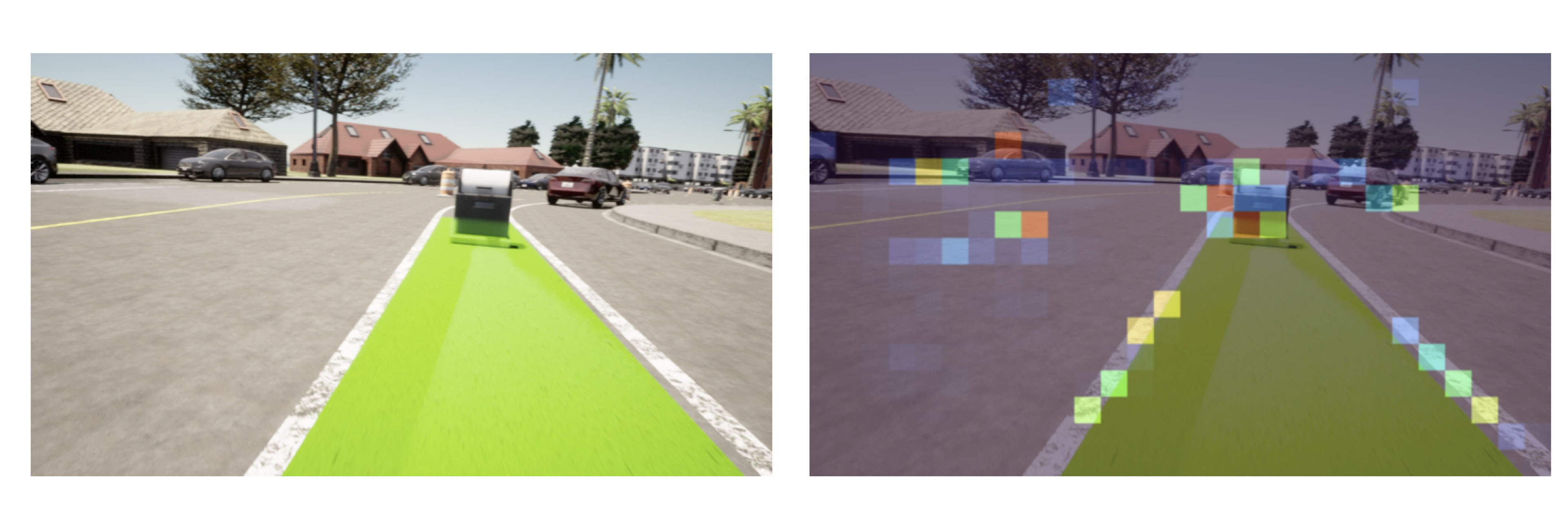

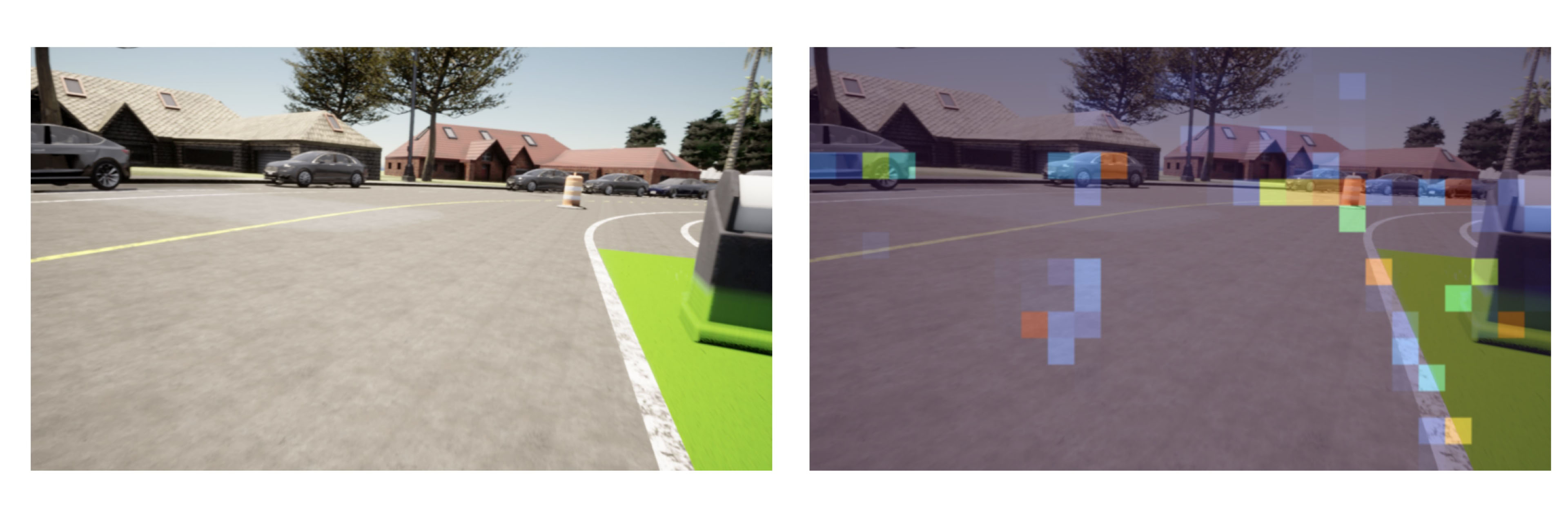

The vision transformer takes camera images divided into square patches as inputs and extracts a visual representation using self-attention. This learned visual representation is then queried via cross-attention in our policy transformer. During inference, the attention weights in the policy transformer can be used to interpret where the model was paying attention at each time step. This is used to interpret why the agent undertook a certain behavior. For instance, in the image below, we can see that the agent’s planned path is most strongly influenced by the parked car and the lane boundary.

Sim2Real Transfer

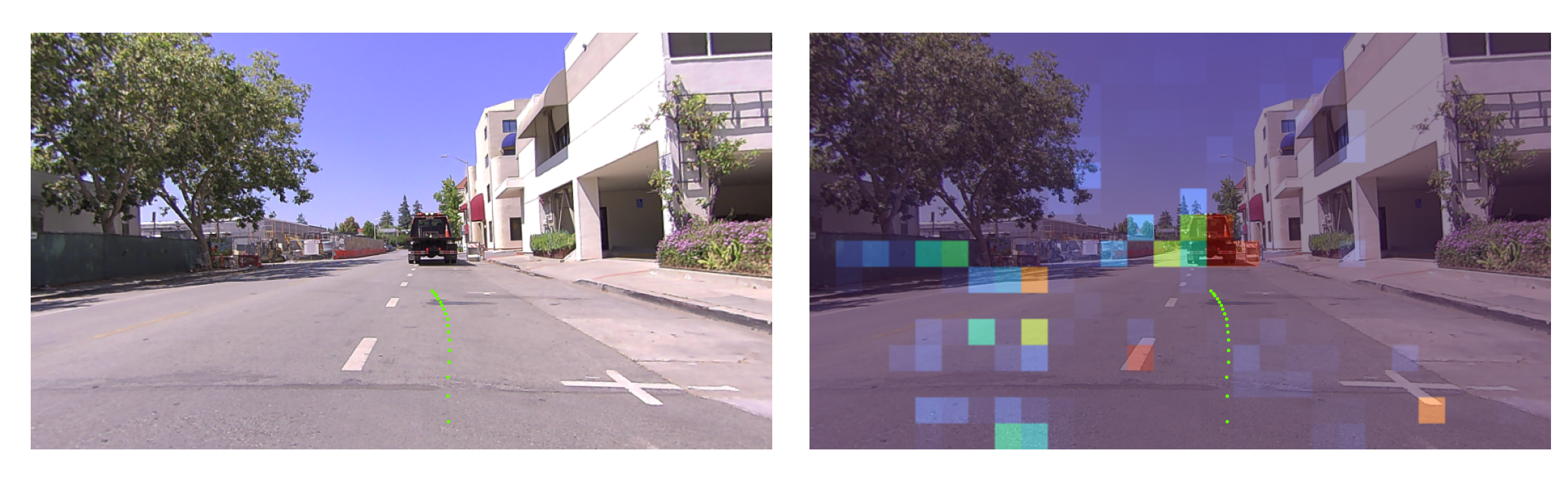

Another key result is that the navigation policy also transfers to the real world. The image below shows that the model attends to the left lane boundary, parked cars and an oncoming vehicle when navigating through a bike lane.

The ability to effect sim2real transfer bodes well for the scalability of our approach because we can create virtually unlimited amounts of relevant and adversarial training data using very open source mobility simulators, and not have to rely on extensive real-world data collection and labeling infrastructure.

Runtime

Finally, we have found that transformer-based driving agents can run quite efficiently on current state-of-the art embedded SOCs. Our real-time inference of the neural path planning model relies on an NVIDIA Jetson AGX Orin, which runs on board our delivery robot. The AGX Orin is capable of up to 275 TOPS in INT8, which provides sufficient compute power and low latencies for such advanced mobility agents.

We are hiring!

We are hiring ML and Simulation Engineers to scale up the training of our driving agent. If you are interested in working with us, please reach out at careers@vayurobotics.com.